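    import cv2

    img = cv2.imread("C:/Users/ranz/Desktop/1.bmp")
    gry = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
    # Inverse binary threshold at 0: only pure-black pixels become 255.
    _, mask_black = cv2.threshold(gry, 0, 255, cv2.THRESH_BINARY_INV)
    # mask_black_and_gry is used below, but its definition is not part of this snippet.
    mask_white = cv2.bitwise_not(mask_black_and_gry)
    mask = cv2.bitwise_xor(mask_black, mask_black_and_gry)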

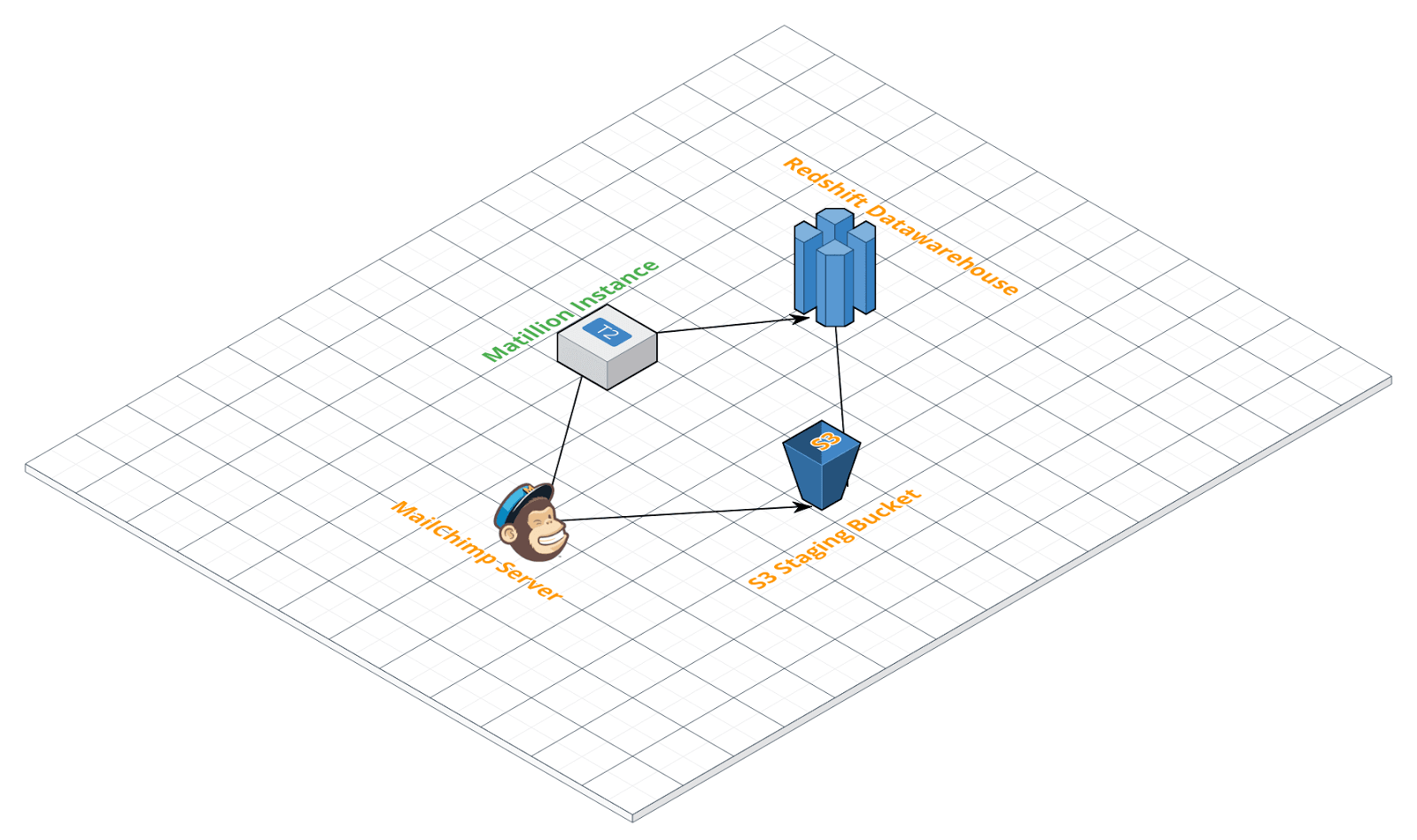

Zbynek R asks: Redshift Data API query statement size limited to 100 KB

Issue: Our Redshift Java SDK queries return a ValidationException: Cannot process query string larger than 100kB

Our system may generate many SQL SELECTs joined by SQL UNIONs, with sorting rules applied at the UNION level. That is why we have reached the 100 KB query size limit.

I have not encountered a similar error when executing the same SQL query through a standard SQL client connected to the Redshift engine. We need to understand this Redshift infrastructure limit better before applying changes and constraints in our business logic. We understand that a SQL query over 100 KB is a sign of poor query design, and we are going to reduce the size of those queries.

Question: Is the 100 KB query size limit a soft limit that is configurable within our cluster/SDK, or is it a hard limit set on the Amazon side?
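While the question is open, one way to see how close a generated statement is to the limit is to measure it before submitting it. The following is a minimal sketch, not part of the original post. The helper names (`build_union`, `statement_size_bytes`, `fits_data_api`) are hypothetical, and it assumes the limit is counted on the UTF-8 encoded statement and equals 100 * 1024 bytes. The error message only says "100kB", so the exact threshold may differ.

```python
# Assumption: the Data API counts the UTF-8 encoded statement and the
# threshold is 100 * 1024 bytes. The error text only says "100kB".
ASSUMED_LIMIT_BYTES = 100 * 1024


def build_union(selects, order_by):
    """Join SELECT statements with UNION and apply one ORDER BY to the result."""
    body = "\nUNION\n".join(f"({select})" for select in selects)
    return f"{body}\nORDER BY {order_by}"


def statement_size_bytes(sql):
    """Return the size of the statement in bytes once encoded as UTF-8."""
    return len(sql.encode("utf-8"))


def fits_data_api(sql, limit=ASSUMED_LIMIT_BYTES):
    """Return True if the statement is within the assumed size limit."""
    return statement_size_bytes(sql) <= limit


if __name__ == "__main__":
    query = build_union(["SELECT 1 AS id", "SELECT 2 AS id"], "id")
    print(statement_size_bytes(query), fits_data_api(query))
```

A check like this can run in the code that generates the UNION, so oversized statements are detected before the SDK call rather than through a ValidationException.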
